Hey pupils! Welcome to the next session on modern neural networks. We are studying the basic neural networks that are revolutionizing different domains of life. In the previous session, we read the Deep Q Networks (DQN) Reinforcement Learning (add link). There, the basic concepts and applications were discussed in detail. Today, we will move towards another neural network, which is an improvement in the deep Q network and is named the double deep Q network.

In this article, we will point towards the basic workings of DQN as well so I recommend you read the deep Q networks if you don’t have a grip on this topic. We will introduce the DDQN in detail and will know the basic needs for improvement in the deep Q network. After that, we’ll discuss the history of these networks and learn about the evolution of this process. In the end, we will see the details of each step in the double-deep Q network. The comparison between DQN and DDQN will be helpful for you to understand the basic concepts. This is going to be very informative so let’s start with our first topic.

What is a Double Deep Q Network?

The double deep Q network is the advanced form of the Dqqp Q Network (DQN). We know that DQN was the revolutionary approach in Atari 2600 games because it utilizes the deep learning algorithm to learn from the simple raw game input. As a result, it provides a super human-like performance in the games. Yet, in some situations, the overestimation was observed in the action’s value; therefore, a suboptimal situation is observed. After different research and feedback from the users, the Double Deep Q Learning method was introduced. The need for the double deep Q network will be understood by studying the history of the whole process.

History of Double Deep Q Network

The history of the double deep Q network is interwoven with the evolution process of deep reinforcement learning. Here is the step-by-step history of how the double deep Q network emerged from the DQN.

Rise of QDN

In 2013, a researcher from Google DeepMind named Volodymyr Mnih and the team published a paper in which they introduced deep networks. According to the paper, the Deep Q network (DQN) is a revolutionary network that combines neural networks and reinforcement learning together.

The DQN made an immediate impact on the game industry because it was so powerful that it could surpass all the human players. Different researchers moved towards this network and created different applications and algorithms related to it.

Limitations of DQN

The DQN gained fame soon and attracted a large audience, but there were some limitations to this neural network. As discussed before, the overestimation bias of DQN was the problem in some cases that led the researchers to make improvements in the algorithm. The overestimation was in the case of action values and it resulted in slow convergence in some specific scenarios.

First Introduction to DDQN

In 2015, a team of scientists introduced the Double Deep Q Network as an improvement of its first version. The highlighted names in this research are listed below:

Ziyu Zhang

Terrance Urban

Martin Wainwright

Shane Legg (from Deep Mind)

They have improved it by applying the decoupling of action selection and action evaluation processes. Moreover, they have paid attention to deep reinforcement learning and tried to provide more effective performance.

First Impression of DDQN

The DDQN was successful in providing a solid impact on different fields. The DQN was impactful on the Ataari 2600 games only but this version has applications in other domains of life as well. We will discuss the applications in detail soon in this article.

The details of evolution at every step can be examined through the table given here:

Event |

Date |

Description |

Deep Q-Networks (DQN) Introduction |

2013 |

|

DQN Limitations Identified |

Late 2010s |

|

Double Deep Q-Networks (DDQN) Proposed |

2015 |

To address DQN's overestimation bias, Ziyu Zhang, Terrance Urban, Martin Wainwright, and Shane Legg propose DDQN. |

DDQN Methodology |

2015 |

DDQN employs two Q-networks

It effectively reduces overestimation bias through decoupling. |

DDQN Evaluation |

2015-2016 |

|

DDQN Applications |

2016-Present |

DDQN's success paves the way for its application in various domains, including:

|

DDQN Legacy |

Ongoing |

DDQN's contributions have established deep reinforcement learning (DRL) as a powerful tool for solving complex decision-making problems in real-world applications. |

How Does DDQN Work?

The working mechanism of the DDQN is divided into different steps. These are listed below:

Action Selection and Action Evaluation

Q value Estimation Process

Replay and Target Q-network Update

Main Q-network Update

Let’s find the details of each step:

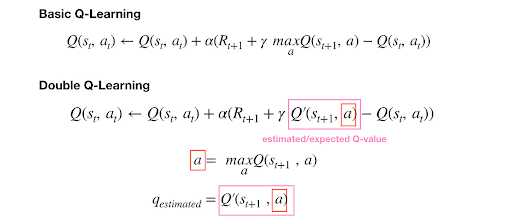

Q value Estimation Process

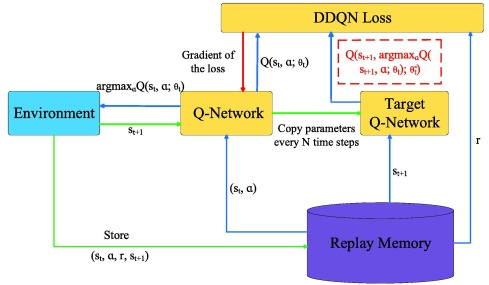

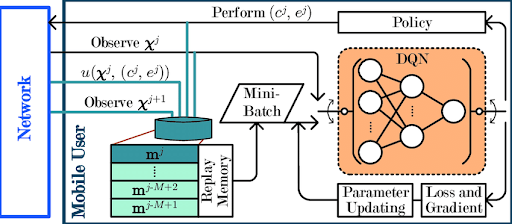

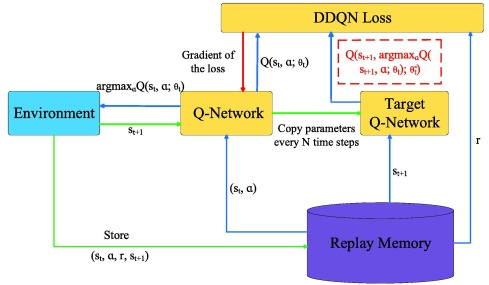

The DDQN has improved its working because it combines the action selection and action evaluation processes. For this, the DDQN has to use two separate Q networks. Here are the details of this network:

Main Q Network in DDQN

The main Q network is responsible for the selection of the particular action that has the highest prediction Q value. This value is important because it is considered the expected future reward of the network for the particular state.

Targeted Q Network

It is a copy of the main Q network and it is used to evaluate the Q values the main network predicts. In this way, the Q values are passed through two separate networks. The difference between the workings of these networks is that this network updates less frequently and makes the values more stable; therefore, these values are less overestimated.

Q-value Estimation and Action Selection

The following steps are carried out in the Q value estimation selection:

The first step is searching for state representation. The agent works and gets the state representation from the environment. This is usually in the form of visual input or some numerical parameters that will be used for further processing.

This state representation move is fed into the main Q network as an input. As a result of different calculations, the output values for the possible action are shown.

Now, among all these values, the agent selects the one Q value from the main Q value that has the highest prediction.

Replay and Target Q-network Update

The values in the previous step are not that efficient. To refine the results, the DDQN applies the experience replay. It uses reply memory and random sampling to store past data and update the Q networks. Here are the details of doing this:

First of all, the agent interacts with the environment and collects a stream of experiences. Each of the streams has the following information:

The current state of the network

Action taken

The reward received in the network

The next state of the network

The results obtained are stored in replay memory.

The random batch of values from the memory is sampled at regular intervals. In this way, the evaluation of the action's performance is updated for each experience. It is done to get the Q values of the actions.

Main Q-network Update

The target Q network updates the whole system by providing the accumulative errors therefore, the main Q network gets frequent updates and as a result, better performance is seen. The main Q network gets continuously learns and this results in better Q value updates.

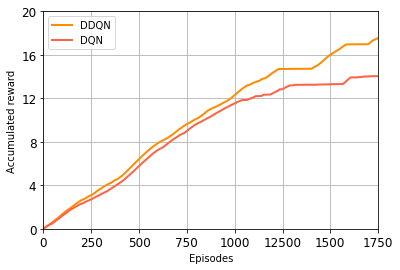

Comparison of DQN and DDQN

Both of these networks are widely used in different applications of life but the main purpose of this article is to provide the best information regarding the double deep Q networks. This can be understood by comparing it with its previous version which is a deep Q network. In research, the difference between the cumulative reward at periodic intervals is shown through the image given next:

Here is the comparison of these two on the basis of fundamental parameters that will allow you to understand the need of DDQN:

Overestimation Bias

As discussed before, the basic point where these two networks are differentiated is the overestimation bias. Here is a short recap of how these two networks work with respect to this parameter:

The traditional DQN is susceptible to overestimation bias therefore, Q values are overestimated and result in suboptimal policies.

The double deep Q networks are designed to deal with the overestimation and provide an accurate estimation of Q values. The separate channels to deal with the action selection and evaluation help it to deal with the overestimation.

The presence of two networks not only helps in the overestimation but also in problems such as action selection and evaluation, Q value estimation, etc.

Stability and Convergence

In DQN, the overestimation results in the instability of the results at different stages which can cause the convergence in the overall results.

To overcome this situation, in DDQN, a special mechanism helps to improve the stability and as a result, better convergence is seen.

Target Network Update in Q Networks

The deep Q networks employ the target network for the purpose of training stabilisation. However these target networks are directly used for the action selection and evaluation therefore, it has less accuracy.

The issue is solved in DDQN because of the periodic updations and it is done with the parameter of the online network. As a result, a stable training process provides better output in DDQN.

Performance of DQN VS DDQN

The performance of DQN is appreciable in different fields of real life. The issue of overestimation causes errors in some cases. So, it has a remarkable performance as compared to different neural networks but less than the DDQN.

In DDQN, fewer errors are shown because of the better network structure and working principle.

Here is the table that will highlight all the points given above in just a glance:

Feature |

DQN |

DDQN |

Overestimation Bias |

Prone to overestimation bias |

Effectively reduces overestimation bias |

Stability and Convergence |

Less stable due to overestimation bias |

More stable due to target Q-network |

Target Network Update in Q Networks |

Direct use of target network for action selection and evaluation |

Periodic updates of the target network using online network parameters |

Overall Performance |

Remarkable performance but prone to errors due to overestimation |

Superior performance with fewer errors |

Additional Parameters |

N/A |

Reduced overestimation bias leads to more accurate Q-value estimates |

The applications of both these networks seem alike but the basic difference is the performance and accuracy.

Hence, the double deep Q network is an improvement over the deep Q networks. The main difference between these two is that the DDQN has less overestimation of the action’s value. This makes it more suitable for different fields of life. We started with the basic introduction of the DDQN and then tried to compare it with the DQN so that you may understand the need for this improvement. After that, we read the details of the process carried out in DDQN from start to finish. In the end, we saw the details of the comparison between these two networks. I hope it was a helpful article for you. If you have any questions, you can ask them in the comment section.