Hello readers! Welcome to the next episode of the Deep Learning Algorithm. We are studying modern neural networks, and today we will look at the details of a reinforcement learning algorithm named the Deep Q Network or, in short, DQN. It is a popular approach that combines deep learning with the principles of Q learning to learn complex control policies.

Today’s topic is a basic introduction to Deep Q Networks. To get there, we first need two underlying concepts: reinforcement learning and Q learning. After that, we’ll see how the two are combined into an effective neural network. In the end, we’ll discuss how DQN is used in different fields of daily life. Let’s start with the basic concepts.

What is Reinforcement Learning?

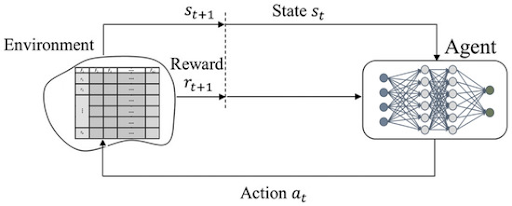

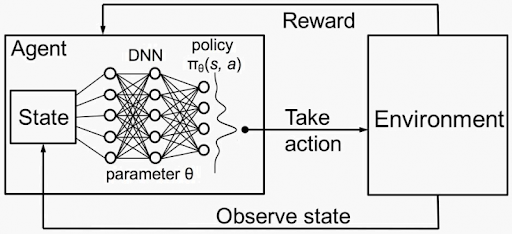

- Reinforcement learning is a subfield of machine learning in which an agent learns from the consequences of its own actions rather than from a fixed dataset.

- It relies on trial-and-error learning: the agent learns to make decisions by interacting with the environment.

- After each action, the agent gets feedback in the form of rewards or penalties, depending on the result. Through this process, it learns the behavior that best achieves its goals and gradually learns to maximize the long-term reward.

Supervised learning, in contrast, learns from labeled data. Here are some important components of reinforcement learning that will help you understand the workings of deep Q networks:

Fundamental Components of Reinforcement Learning

| Name of Component | Detail |
|---|---|
| Agent | An agent is a software program, robot, human, or any other entity that learns and makes decisions within the environment. |
| Environment | The world in which the agent operates. It contains everything the agent interacts with and perceives. |
| Action | The decision or movement the agent makes in the environment in a given state. |
| State | The complete set of information the agent has about the system at a specific time. |
| Reward | The feedback the agent receives from the environment after an action, in the form of a reward or penalty. |
| Policy | A policy is a strategy that maps states to actions. The main purpose of reinforcement learning is to find a policy that maximizes the long-term reward of the agent. |
| Value Function | The expected future reward for the agent starting from a given state. |
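
To make the components above concrete, here is a minimal sketch of the agent-environment loop in plain Python. The `Corridor` environment, its reward values, and the `random_policy` function are hypothetical and invented only for illustration. The agent here does not learn anything yet; the sketch only shows how state, action, reward, and policy fit together.

```python
import random


class Corridor:
    """Hypothetical environment: cells 0..4 in a row, the goal is the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position  # the state is simply the agent's cell index

    def step(self, action):
        """Apply an action (-1 = left, +1 = right) and return (state, reward, done)."""
        self.position = min(max(self.position + action, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else -0.1  # small penalty per step, reward at the goal
        return self.position, reward, done


def random_policy(state):
    """A policy maps a state to an action; this one ignores the state."""
    return random.choice([-1, 1])


def run_episode(env, policy, max_steps=1000):
    """Let the agent interact with the environment until the goal or the step limit."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # agent decides
        state, reward, done = env.step(action)  # environment responds
        total_reward += reward                  # feedback accumulates
        if done:
            break
    return state, total_reward


random.seed(0)
final_state, total = run_episode(Corridor(), random_policy)
print(final_state, round(total, 2))
```

A learning agent would replace `random_policy` with a policy that improves as rewards come in, which is what Q learning provides.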

Basic Concepts of Q Learning for Deep Q Networks

Q learning is a reinforcement learning algorithm that learns a function denoted by Q(s,a). Here,

Q = the function being learned, which estimates the expected cumulative reward

s = the current state

a = the action taken in that state

This is called the action value function of the learning algorithm. The main purpose of Q learning is to find the optimal policy to maximize the expected cumulative reward. Here are the basic concepts of Q learning:

State Action Pair in Q Learning

In Q learning, the interaction between the agent and the environment is described through state-action pairs. We defined the state and the action in the previous section. For every pair (s, a), Q learning keeps an estimate Q(s,a) of how good it is to take action a in state s, and these estimates are what the learning process updates.

Bellman Equation in Q learning

The core update rule for Q learning is based on the Bellman equation. It updates the Q values iteratively on the basis of the rewards received during the process, together with an estimate of future values. The update rule is given next:

$$Q(s,a) \leftarrow (1-\alpha)\cdot Q(s,a) + \alpha\cdot\left[R(s,a) + \gamma\cdot\max_{a'} Q(s',a')\right]$$

Here,

γ = The discount factor, which balances immediate and future rewards.

R(s,a) = The immediate reward for taking the action “a” in the state “s”.

α = The learning rate, which controls the step size of the update. It is always between 0 and 1.

max_a′ Q(s′,a′) = The estimate of the maximum Q value over all actions a′ in the next state s′.
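
The update rule above can be sketched as a small Python function that works on a table of Q values. This is a minimal illustration, not a full training loop: the function name `q_update`, the state names, and the numbers are assumptions chosen so the arithmetic is easy to follow by hand.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions, alpha=0.5, gamma=0.5):
    """Apply one Q learning update to the table `q` in place and return the new value."""
    # max over a' of Q(s', a'): best estimated value available in the next state
    best_next = max(q[(next_state, a)] for a in actions)
    # R(s, a) + gamma * max_a' Q(s', a')
    target = reward + gamma * best_next
    # blend the old estimate with the new target, weighted by the learning rate
    q[(state, action)] = (1 - alpha) * q[(state, action)] + alpha * target
    return q[(state, action)]


actions = ["left", "right"]
q = defaultdict(float)        # unseen (state, action) pairs start at 0.0
q[("s1", "right")] = 4.0      # pretend this value was learned earlier

new_value = q_update(q, "s0", "right", reward=1.0, next_state="s1", actions=actions)
print(new_value)
```

With these numbers the target is 1.0 + 0.5 × 4.0 = 3.0, and the old estimate of 0.0 moves halfway toward it, so the updated Q value is 1.5.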

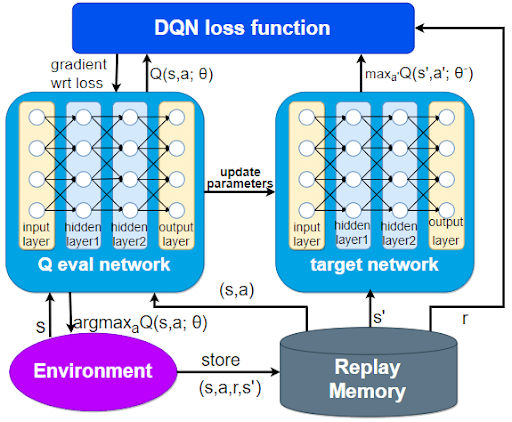

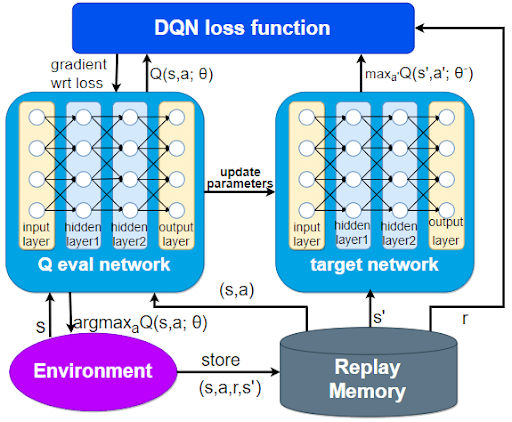

What is a Deep Q Network (DQN)?

A Deep Q Network is a neural network that approximates the Q function we have just discussed. This lets Q learning be applied to problems such as playing video games, where there are far too many states to keep a separate Q value for each one. DQN uses reinforcement learning to solve problems in which the agent makes decisions sequentially and tries to maximize the cumulative reward. Combining Q learning with a deep neural network makes it efficient enough to deal with high-dimensional input spaces.

DQN is considered an off-policy temporal difference method. It is a temporal difference method because it updates the value of the present state-action pair using the observed reward plus an estimate of future rewards. It is off-policy because the maximum in that estimate evaluates the best available action, regardless of which action the agent actually takes next. It is considered a successful neural network because it can solve complex reinforcement learning problems efficiently.
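
As a rough sketch of the idea, the code below uses a very small neural network, written in plain Python, that takes a state vector and returns one Q value per action. It then computes the temporal difference target and the squared error for a single transition. The layer sizes, the example state vectors, and the helper names are all assumptions made for illustration, and the weights are random and never trained. Practical DQN implementations use a deep learning library and usually add components such as a replay memory and a separate target network, which are not shown here.

```python
import random

random.seed(0)
STATE_DIM, HIDDEN, N_ACTIONS = 4, 8, 2  # hypothetical sizes


def init_layer(n_in, n_out):
    """Random weights (one row per output unit) and zero biases."""
    weights = [[random.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer):
    """Fully connected layer: weights times input plus bias."""
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def q_network(params, state):
    """Forward pass: state vector in, one Q value per action out."""
    hidden = [max(0.0, h) for h in dense(state, params[0])]  # ReLU activation
    return dense(hidden, params[1])


def td_target(params, reward, next_state, done, gamma=0.99):
    """R(s,a) + gamma * max_a' Q(s',a'), with no future term once the episode has ended."""
    if done:
        return reward
    return reward + gamma * max(q_network(params, next_state))


params = [init_layer(STATE_DIM, HIDDEN), init_layer(HIDDEN, N_ACTIONS)]

# One made-up transition (s, a, r, s')
state = [0.1, -0.2, 0.0, 0.3]
next_state = [0.2, -0.1, 0.0, 0.25]
action, reward = 1, 1.0

q_values = q_network(params, state)
target = td_target(params, reward, next_state, done=False)
loss = (target - q_values[action]) ** 2  # squared TD error for this transition
greedy_action = max(range(N_ACTIONS), key=lambda a: q_values[a])
print(len(q_values), greedy_action, loss >= 0.0)
```

Training would adjust the network weights by gradient descent to reduce this loss over many transitions, which plays the same role as the table update in Q learning.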

Applications of Deep Q Network

The Deep Q network finds applications in domains where optimization of results and decision-making are the basic steps. Because it learns policies that optimize a long-term outcome, it is used in several different ways. Here are some highlighted applications of Deep Q Networks:

Atari 2600 Games

The Atari 2600, also known as the Atari Video Computer System (VCS), is a home video game console that was released in 1977. The Atari 2600 and the Deep Q Network come from two very different fields, and when they were connected together, they sparked a revolution in artificial intelligence.

The Deep Q network learns to play the Atari games in different ways. Here are some of the ways in which DQN uses the Atari 2600 as a training ground:

- Learning from pixels

- Q learning with deep learning

- Overcoming sparse rewards

DQN in Robotics

Just like reinforcement learning in general, DQN is used in the field of robotics for robotic control and manipulation.

It is used for learning specific skills in robots, such as:

- Grasping objects

- Navigating environments

- Tool manipulation

DQN’s ability to handle high-dimensional sensory inputs makes it a good option for robotic training, where robots have to perceive and interact with their complex surroundings.

Autonomous Vehicles with DQN

DQN is used in autonomous vehicles so that the vehicles can make complex decisions even in heavy traffic.

Different techniques used with the deep Q network in these vehicles allow them to perform basic tasks efficiently, such as:

- Navigating the road

- Decision-making in heavy traffic

- Avoiding obstacles on the road

DQN can learn policies adaptively and consider various factors for better performance. In this way, it helps to provide a safe and intelligent vehicular system.

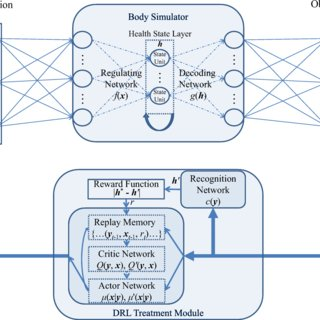

Healthcare and DQN

Just like other neural networks, DQN is being applied in the medical and health field. It assists the experts in different tasks and helps them get better results. Some of the tasks where DQN is used are:

- Medical diagnosis

- Treatment optimization

- Drug discovery

DQN can analyze medical record history and help doctors get a more informed picture of the patient and the disease.

It is also used to build personalized treatment plans for individual patients.

Resource Management with DQN

Deep Q learning helps with resource management by learning policies for allocating resources optimally.

It is used in fields like energy management systems, usually for renewable energy sources.

Deep Q Network in Video Streaming

In video streaming, deep Q networks are used for a better viewing experience. The agent learns to adjust the video quality on the basis of different conditions such as the network speed, the type of network, the user’s preference, etc.
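
One way to picture this is to write the streaming problem in terms of state, action, and reward. The sketch below is purely hypothetical: the bitrate levels, the fields of `StreamState`, and the weights inside `reward` are invented for illustration and do not come from any real streaming system. It only shows the kind of problem definition a DQN agent could be trained on.

```python
from dataclasses import dataclass

BITRATES_KBPS = [300, 750, 1200, 2850]  # hypothetical quality levels, one per action


@dataclass
class StreamState:
    throughput_kbps: float  # recently measured network speed
    buffer_seconds: float   # video already downloaded but not yet played
    last_action: int        # index of the previously chosen bitrate


def reward(state: StreamState, action: int, chunk_seconds: float = 4.0) -> float:
    """Hypothetical reward: higher quality is good, stalls and quality jumps are bad."""
    bitrate = BITRATES_KBPS[action]
    download_seconds = bitrate * chunk_seconds / state.throughput_kbps
    stall_seconds = max(0.0, download_seconds - state.buffer_seconds)
    switch_penalty = 0.5 * abs(bitrate - BITRATES_KBPS[state.last_action]) / 1000.0
    return bitrate / 1000.0 - 4.0 * stall_seconds - switch_penalty


slow = StreamState(throughput_kbps=500.0, buffer_seconds=3.0, last_action=0)
fast = StreamState(throughput_kbps=5000.0, buffer_seconds=10.0, last_action=0)

# Sanity check of the reward only: a trained agent would pick actions from learned Q values.
best_slow = max(range(len(BITRATES_KBPS)), key=lambda a: reward(slow, a))
best_fast = max(range(len(BITRATES_KBPS)), key=lambda a: reward(fast, a))
print(BITRATES_KBPS[best_slow], BITRATES_KBPS[best_fast])
```

Picking the action with the best immediate reward, as done at the end, is not Q learning. It is only a check that the reward favors low quality on a slow connection and high quality on a fast one.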

Beyond these examples, DQN can be applied in any field where complex decisions have to be learned from current and past situations with future outcomes in mind. Some other examples are the implementation of deep Q learning in the educational system, supply chain management, finance, and related fields.

In this way, we have learned the basic concepts of Deep Q learning. We started with the concepts needed to understand DQN, which are reinforcement learning and Q learning. With those in place, the introduction of the Deep Q network itself was easier to follow. In the end, we looked at the applications of DQN to see where it is used. I hope you now know the basics of DQN. If you want more detail on any point mentioned above, you can ask in the comment section.