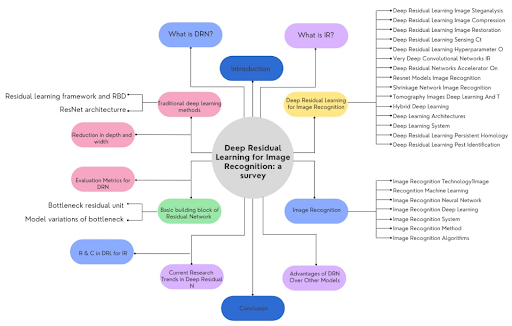

Hey readers! Welcome to the next lecture on neural networks. We are learning about modern neural networks, and today we will look at residual networks in detail. Deep learning has produced remarkable achievements in recent years, and residual learning is one of them. This neural network has revolutionized the design and training of deep neural networks for image recognition. That is why we will cover its introduction and the changes these networks have made in the field of computer vision.

In this article, we will discuss the basic introduction of residual networks. We will see the concept of residual function and understand the need for this network with the help of its background. After that, we will see the types of skip connection methods for the residual networks. Moreover, we will have a glance at the architecture of this network and in the end, we will see some points that will highlight the importance of ResNets in the field of image recognition. This is going to be a basic but important study about this network so let’s start with the first point.

What is a Residual Neural Network?

Residual networks (ResNets) were introduced by Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun in 2015, in the paper titled “Deep Residual Learning for Image Recognition”. The paper was presented at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

These networks have made their name in the field of computer vision because of their remarkable performance. Since their introduction, they have been used extensively for tasks like image classification, object detection, semantic segmentation, etc.

ResNets are a powerful tool for building high-performance deep learning models and are one of the best choices for fields related to images and graphs.

What is a Residual Function?

A residual function is what each block of a ResNet learns, and the network uses these functions for tasks such as image classification and object detection. Residual functions are easier to learn than the full mappings learned by traditional neural networks because a block does not have to learn its output from scratch; it only has to learn the residual, that is, the difference between the desired output and its input. This is the main reason why residual functions tend to be smaller and simpler than the mappings learned by other networks.

Another advantage of using residual functions for learning is that the networks can become more robust to overfitting and noise. This is because the network can learn to cancel out such features by using the predicted residual functions.

These networks are popular because very deep versions can be trained without running into the vanishing gradient problem (you will learn about it in just a bit). Residual networks train smoothly because gradients can flow through the network easily. Mathematically, the residual function is represented as:

Residual(x) = H(x) - x

Here,

- H(x) = the network's approximation of the desired output considering x as input

- x = the original input to the residual block
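To make the formula concrete, here is a minimal Python sketch. The function `desired_mapping` is a made-up stand-in for H(x), chosen only for illustration; it is not taken from the ResNet paper. The sketch shows that adding the input back to the residual recovers H(x).

```python
# Toy illustration of Residual(x) = H(x) - x (all names are illustrative).

def desired_mapping(x):
    """H(x): a hypothetical target mapping that stays close to the identity."""
    return 1.1 * x + 0.5

def residual(x):
    """Residual(x) = H(x) - x, the part that remains once the input is removed."""
    return desired_mapping(x) - x

x = 2.0
h = desired_mapping(x)      # full mapping H(x)
r = residual(x)             # small correction on top of the input
reconstructed = x + r       # adding the input back gives H(x) again

print(h, r, reconstructed)
```

When H(x) is close to x, the residual is a small correction, which is why it can be an easier target to learn than the full mapping.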

The background of residual neural networks will help you understand why this network is needed, so let’s discuss it.

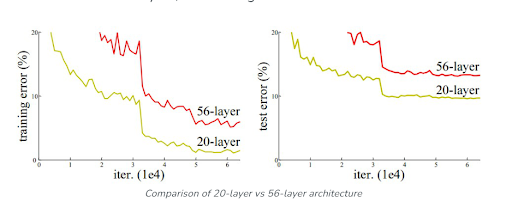

Background for Residual Neural Network

In 2012, the CNN-based architecture called AlexNet won the ImageNet competition, and this led many researchers to build deep networks with more layers in order to reduce the error rate. Soon, researchers found that adding layers helps only up to a certain depth; beyond that limit, the gradient becomes nearly 0 or too large. This is called the vanishing or exploding gradient problem. As a result, the training and testing errors increase as the number of layers increases. This problem can be solved with residual networks; therefore, this network is extensively used in computer vision.
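The vanishing and exploding behaviour follows from the chain rule: the gradient reaching the early layers is a product of one factor per layer. The sketch below is a deliberate simplification that assumes every layer contributes the same scalar factor (0.5 or 1.5, both chosen arbitrarily); real networks have a different factor in each layer.

```python
def backprop_gradient_scale(per_layer_derivative, num_layers):
    """Multiply the same local derivative once per layer (chain rule)."""
    scale = 1.0
    for _ in range(num_layers):
        scale *= per_layer_derivative
    return scale

for depth in (5, 20, 50):
    vanishing = backprop_gradient_scale(0.5, depth)   # factor below 1 shrinks
    exploding = backprop_gradient_scale(1.5, depth)   # factor above 1 grows
    print(f"depth={depth:2d}  factor 0.5 -> {vanishing:.3e}  factor 1.5 -> {exploding:.3e}")
```

With a factor below 1 the product collapses toward zero as depth grows, and with a factor above 1 it blows up.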

Skip Connection Method in ResNets

ResNets are popular because they use a specialized mechanism to deal with problems like vanishing/exploding gradients. This is called the skip connection method (or shortcut connections), and it is defined as:

"The skip connection is a type of connection in a neural network in which the input skips one or more layers so that those layers learn a residual function, that is, the difference between the output and the input of the block."

This has made ResNets popular for complex tasks with a large number of layers.

Types of Skip Connection in ResNets

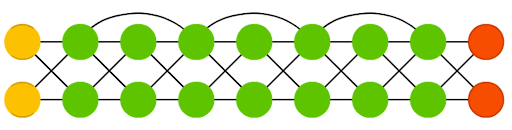

There are two types of skip connections listed below:

- A short skip connection is the more common type of connection in residual neural networks. It allows the network to learn the residual function at a rapid rate. In residual learning, short skip connections are used within adjacent residual blocks, so the network learns the residual function inside each block. For example, a residual block with a short skip connection can learn to add a small amount of noise to its input or to change the contrast of the input image.

- A long skip connection connects the input of a residual block to the output of a much later layer of the network. This connection does not work on a small scale; instead, it can add a small amount of noise to the entire image or change the contrast of the whole image. This allows the network to learn long-range dependencies.

Both of these types are responsible for the accurate performance of the residual neural networks. Out of both of these, short skip connections are more common because they are easy to implement and provide better performance.
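The two types can be contrasted in a short Python sketch. It works on a single number rather than an image, and the residual functions in `fns` are hypothetical placeholders for trained layers. Combining both types in one stack is just one possible arrangement, assumed here for illustration.

```python
def short_skip_block(x, residual_fn):
    """One residual block: the input skips over residual_fn and is added back."""
    return x + residual_fn(x)

def stack_with_long_skip(x, residual_fns):
    """Chain several short-skip blocks, then add the original input once more
    through a long skip connection that bypasses the whole stack."""
    out = x
    for fn in residual_fns:
        out = short_skip_block(out, fn)
    return out + x  # long skip: the stack input joins the stack output

# Hypothetical residual functions, each applying a small correction.
fns = [lambda v: 0.1 * v, lambda v: -0.05 * v, lambda v: 0.02]
y = stack_with_long_skip(1.0, fns)
print(y)
```

The short skips act locally inside each block, while the long skip carries the original input across all of them.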

Architecture of Residual Networks

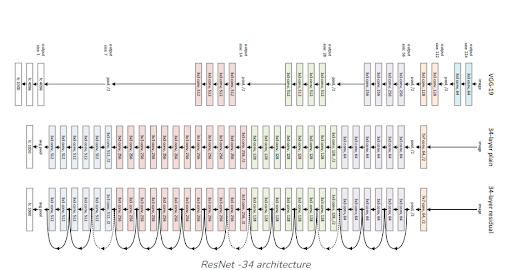

The architecture of these networks is inspired by VGG-19. A 34-layer plain network is built first, and then shortcut connections are added to it. These shortcut connections turn the architecture into a “residual network”, which results in better output with great processing speed.

Deep Residual Learning for Image Recognition

There are some other uses of residual learning, but it is mostly used for image recognition and related tasks. In addition to the skip connection, there are several other features through which this network provides the best functionality in image recognition. They are listed below:

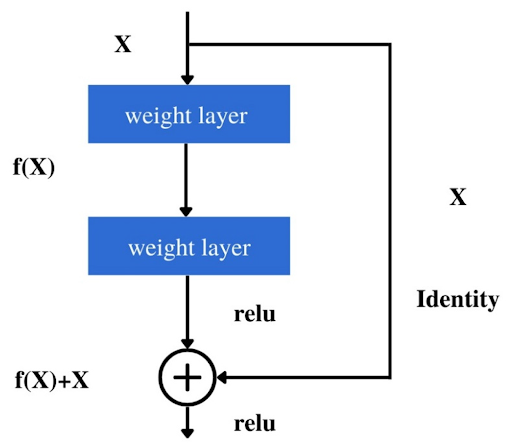

Residual Block

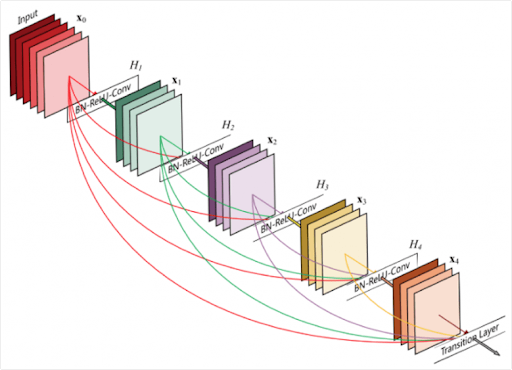

It is the fundamental building block of ResNets and plays a vital role in the functionality of a network. These blocks consist of two parts:

- Identity path

- Residual path

Here, the identity path does not involve any major processing; it only passes the input data directly through the block. The residual path, on the other hand, learns to capture the difference between the input data and the desired output of the block.
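The two paths can be sketched as a small Python class. This is a toy version under stated assumptions: it works on a list of floats and uses element-wise weights (`w1`, `w2`) in place of the convolution layers a real ResNet block would use, and the class and method names are illustrative.

```python
class ResidualBlock:
    """Toy residual block operating on a list of floats.

    Residual path: two element-wise weighted layers with a ReLU in between.
    Identity path: the unchanged input.
    """

    def __init__(self, w1, w2):
        self.w1 = w1  # weights of the first layer in the residual path
        self.w2 = w2  # weights of the second layer in the residual path

    @staticmethod
    def relu(values):
        return [max(0.0, v) for v in values]

    def residual_path(self, x):
        hidden = self.relu([w * v for w, v in zip(self.w1, x)])
        return [w * v for w, v in zip(self.w2, hidden)]

    def forward(self, x):
        identity = x                        # identity path: no processing
        residual = self.residual_path(x)    # residual path: learned correction
        # Add the two paths, then apply the final activation.
        return self.relu([i + r for i, r in zip(identity, residual)])


block = ResidualBlock(w1=[1.0, 1.0], w2=[0.5, 0.5])
print(block.forward([2.0, 4.0]))
```

If the residual path outputs zeros, the block simply passes a non-negative input through unchanged, which is what makes an identity mapping easy for the block to represent.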

Learning Residual

The residual neural network learns by working with residuals. It compares the output of the residual path with the desired output and focuses only on the additional information required to get the final output. This is an effective way to learn because, with every iteration, the results move closer to the target output.

Easy Training Method

ResNets are easy to train, and users can get the desired output in less time. The skip connections allow the gradient to pass directly through the network. This holds even in deep architectures, so the gradient can flow easily from the last layers back to the first ones. This feature helps to solve the vanishing gradient problem and allows networks with hundreds of layers to be trained efficiently. The ability to train such deep architectures makes ResNets popular for complex tasks such as image recognition.
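A rough way to see why the gradient survives is to compare the derivative of a plain layer, y = f(x), with that of a residual block, y = x + f(x). The sketch below assumes every layer has the same small scalar derivative f'(x) = 0.01, which is a simplification chosen only to make the effect visible.

```python
def plain_gradient(local_derivative, num_layers):
    """d(output)/d(input) for stacked layers y = f(x): a product of f'(x)."""
    return local_derivative ** num_layers

def residual_gradient(local_derivative, num_layers):
    """d(output)/d(input) for stacked blocks y = x + f(x):
    each block contributes 1 + f'(x), where the 1 comes from the skip path."""
    return (1.0 + local_derivative) ** num_layers

depth = 100
print("plain   :", plain_gradient(0.01, depth))
print("residual:", residual_gradient(0.01, depth))
```

The constant 1 contributed by each skip connection keeps the product from collapsing to zero, even when the residual path itself has a tiny derivative.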

Frequent Updating of Weights

During training, the residual network adjusts its parameters, learning to update the weights so that the difference between the output of the network and the desired output is minimized. In other words, the network learns the residuals that must be added to the input to get the desired output.
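As a minimal sketch of this weight update, consider a block whose residual path has a single weight `w`, so the output is x + w * x. The function name, learning rate, and step count below are assumptions for illustration, and the training loop is plain gradient descent on the squared error.

```python
def train_residual_weight(x, target, learning_rate=0.1, steps=50):
    """Fit w in output = x + w * x with gradient descent on squared error."""
    w = 0.0  # start from the identity mapping: output == x
    for _ in range(steps):
        output = x + w * x            # identity path + residual path
        error = output - target
        grad_w = 2.0 * error * x      # derivative of error**2 with respect to w
        w -= learning_rate * grad_w   # weight update
    return w

w = train_residual_weight(x=1.0, target=1.2)
print(w, 1.0 + w * 1.0)
```

Here the weight only has to learn the small correction between the input 1.0 and the target 1.2, not the whole output.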

In addition to all these, features like performance gain and a suitable architecture depth allow the residual network to provide significantly better output, particularly for image recognition.

Conclusion

Hence, today we learned about a modern type of neural network called the residual network. We saw why these networks are important in deep learning. We covered the basic workings and terms used in residual networks and tried to understand how they provide accurate output for complex tasks such as image recognition.

ResNets were introduced in 2015 at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). They had great success, and people started working on them because of their efficient results. They use skip connections, which help with the deep processing of every layer. Moreover, features like the residual block, learning residuals, easy training, frequent updating of weights, and the deep architecture of this network allow it to achieve significantly better results than traditional neural networks. I hope you got the basic information about the topic. If you want to know more, you can ask in the comment section.