Hi readers! I hope you are doing great. We are learning about modern neural networks in deep learning, and in the previous lecture, we looked at capsule neural networks, which group neurons into capsules. Today we will discuss graph neural networks (GNNs) in detail.

Graph neural networks are among the most actively researched networks today. As a result, there are multiple types of GNNs, and their architecture is a little more complex than that of other networks. We will start the discussion with an introduction to GNNs.

Introduction to Graph Neural Networks

The work on graph neural networks started in the 2000s, when researchers explored graph-based semi-supervised learning with neural networks. Advances in these studies led to new neural networks that deal specifically with graph-structured information. The structure of GNNs is strongly influenced by the workings of convolutional neural networks (CNNs). Research on GNNs grew when a simple CNN was not enough to give good results because of the complex structure and arbitrary size of the data.

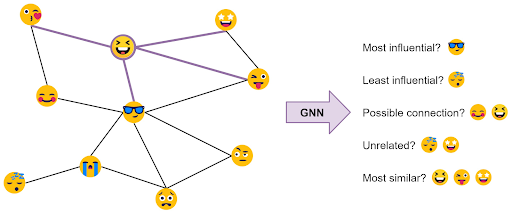

Every type of neural network handles its input data in a specific way. In graph neural networks, the information is processed in the form of graphs (details are in the next section). Because a graph records how its entities are connected, a GNN can capture complex dependencies in the data. Let us learn about graphs in neural networks to understand the architecture.

Graphs in Neural Networks

A graph is a powerful representation of data in the form of a connected network of entities. It is a data structure that represents the complex relationships and interactions between data points. It consists of two parts:

- Node

- Edge

Let us understand both of these in detail.

Nodes in Graph

Nodes, also known as vertices, are the entities or data points in the graph. Simple examples of nodes are people, places, and things. Each node is associated with features or attributes that describe it, and these are known as node features. The features vary according to the type of graph. For instance, in a social network, the node is the user profile, and the node features include the user's age, nation, gender, interests, etc.

Edges in Graph

Edges are the connections between the nodes, and these are also known as the links or relationships of the nodes. Edges may be directed or undirected, and they play a vital role in connecting one node to another. A directed edge represents a one-way relationship from one node to the other, and an undirected edge represents a bidirectional relationship between the nodes.
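To make nodes, node features, and edges concrete, here is a minimal sketch in plain Python. The tiny social graph, the feature values, and the helper `build_adjacency` are all illustrative assumptions, not part of any library.

```python
# A tiny, made-up social graph: nodes are user profiles with node features.
node_features = {
    "alice": {"age": 30, "nation": "PK"},
    "bob": {"age": 25, "nation": "US"},
    "carol": {"age": 41, "nation": "DE"},
}

# Each edge is a (source, target) pair.
edges = [("alice", "bob"), ("bob", "carol")]


def build_adjacency(nodes, edges, directed):
    """Return a dict mapping each node to the list of its neighbors."""
    adjacency = {node: [] for node in nodes}
    for source, target in edges:
        adjacency[source].append(target)
        if not directed:
            # An undirected edge is a relationship in both directions.
            adjacency[target].append(source)
    return adjacency


directed_graph = build_adjacency(node_features, edges, directed=True)
undirected_graph = build_adjacency(node_features, edges, directed=False)

print(directed_graph)
print(undirected_graph)
```

In the directed version, "carol" has no outgoing neighbors, while in the undirected version every edge can be followed both ways.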

GNN Architecture

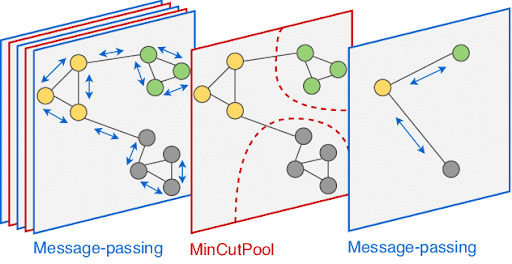

Just like other neural networks, the GNN also relies on multiple layers. In a GNN, each layer gathers information from the neighbors of every node. It follows the message-passing paradigm for the flow of information; therefore, the GNN captures the inherent relationships and interactions among the nodes of the graph. In addition to nodes and edges, here are some key features to understand the architecture of GNN.

Message Passing Mechanism

The layers of the GNN are responsible for the flow of information from node to node. In message passing, every node exchanges information with its neighbors, and the exchanged data is transformed into an informative message. The type of a node determines the information it contributes, and nodes are related to one another through their edges and node features.

After the messages are exchanged, each node aggregates what it received from its neighbors. The aggregation can be a simple sum or mean, a weighted sum, or a more complex mechanism such as attention-based aggregation.
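One round of message passing with sum or mean aggregation can be sketched as follows. This is a simplified illustration in plain Python: the toy graph, the feature values, and the function names `aggregate` and `message_passing_step` are assumptions made for the example.

```python
# Undirected toy graph as an adjacency list (node id -> neighbor ids).
neighbors = {0: [1, 2], 1: [0], 2: [0]}

# One 2-dimensional feature vector per node (made-up values).
features = {0: [1.0, 0.0], 1: [0.0, 2.0], 2: [4.0, 2.0]}


def aggregate(messages, how):
    """Combine a list of equally sized vectors with a sum or a mean."""
    summed = [sum(values) for values in zip(*messages)]
    if how == "sum":
        return summed
    if how == "mean":
        return [value / len(messages) for value in summed]
    raise ValueError(f"unknown aggregation: {how}")


def message_passing_step(neighbors, features, how):
    """Every node receives its neighbors' features and aggregates them."""
    return {
        node: aggregate([features[n] for n in neighbor_ids], how)
        for node, neighbor_ids in neighbors.items()
    }


summed = message_passing_step(neighbors, features, "sum")
averaged = message_passing_step(neighbors, features, "mean")
print(summed[0])    # [4.0, 4.0]
print(averaged[0])  # [2.0, 2.0]
```

Both aggregations ignore the order of the neighbors, which matters because a graph has no natural ordering of its nodes.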

Learnable Parameters

Like other neural networks, the GNN has learnable parameters. These are the weights and biases that are learned during training. The state of each node is updated based on these parameters. In a GNN, there are two kinds of learnable parameters:

- Edge weights express the importance of each edge in the GNN. A higher weight gives more importance to that particular edge when the node states are updated in an iteration.

- Biases are constant offset values that are added to the nodes before they are updated. These biases vary according to the importance and behavior of the nodes and account for their intrinsic properties.
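The role of edge weights and biases in a node update can be sketched as below. This follows the description above with one scalar state per node; the parameter values are hypothetical, and the ReLU activation is an assumption added for the example. Note that many practical GNN layers learn a shared weight matrix instead of one weight per edge.

```python
def relu(x):
    """Rectified linear unit, a common activation function."""
    return max(0.0, x)


# Hypothetical parameters; in a real GNN they are learned during training.
edge_weights = {(1, 0): 0.5, (2, 0): 2.0}  # (source, target) -> weight
bias = {0: -1.0}                           # one offset per updated node

# Current scalar state of each node (made-up values).
state = {0: 0.0, 1: 4.0, 2: 1.0}


def update_node(target, state, edge_weights, bias):
    """Weighted sum over incoming edges, plus the bias, then activation."""
    weighted_sum = sum(
        weight * state[source]
        for (source, edge_target), weight in edge_weights.items()
        if edge_target == target
    )
    return relu(weighted_sum + bias[target])


new_state_0 = update_node(0, state, edge_weights, bias)
print(new_state_0)  # 0.5 * 4.0 + 2.0 * 1.0 - 1.0 = 3.0
```

The edge from node 2 has the larger weight, so its state is scaled by 2.0 while the state of node 1 is scaled by only 0.5.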

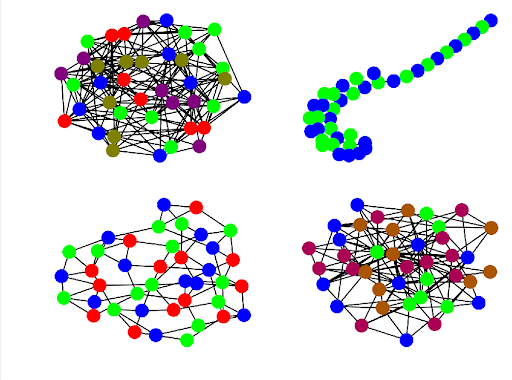

Types of GNN Architectures

Since its introduction in the 2000s, continuous research and work have been done on the GNN. As a result, there are multiple types of GNNs in use today, each suited to particular tasks. Here are some important types of graph neural networks:

Graph Convolutional Networks

Graph convolutional networks (GCNs) are inspired by convolutional neural networks. These are among the earliest and most widely used GNN variants. These networks learn by applying convolutions to the graph data. In this way, they aggregate and update each node's representation based on the representations of its neighbor nodes.
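A single graph convolution can be sketched in plain Python as below. The article does not spell out the exact formula, so this example assumes the commonly used rule of adding self-loops, normalizing the adjacency matrix symmetrically by node degree, and applying a weight matrix followed by ReLU. The helper names and the toy inputs are illustrative.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def gcn_layer(adjacency, features, weight):
    """One graph convolution: normalize, aggregate, transform, activate."""
    n = len(adjacency)
    # Add self-loops so that each node also keeps its own features.
    a_hat = [
        [adjacency[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]
    degree = [sum(row) for row in a_hat]
    # Symmetric normalization: entry (i, j) is divided by sqrt(d_i * d_j).
    norm = [
        [a_hat[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
        for i in range(n)
    ]
    hidden = matmul(matmul(norm, features), weight)
    return [[max(0.0, value) for value in row] for row in hidden]


adjacency = [[0.0, 1.0], [1.0, 0.0]]  # two nodes joined by one edge
features = [[1.0, 0.0], [0.0, 1.0]]   # one feature row per node
weight = [[1.0, 0.0], [0.0, 1.0]]     # identity stands in for learned weights

output = gcn_layer(adjacency, features, weight)
print(output)
```

With these inputs, each node ends up with an equal mix of its own features and its neighbor's features.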

Graph Recurrent Networks

Graph recurrent networks (GRNs) are inspired by recurrent neural networks. The basic use of these networks is in sequence modeling. These networks apply recurrent operations to the graph data and learn features from it. These features represent the global structure of the graph.

Graph Attention Networks

Graph attention networks (GATs) introduce the attention mechanism into GNNs. This mechanism is used to learn the weights of the edges in the graph. It helps in message passing because each node can give more weight to its most relevant neighbors, which makes the aggregation more effective. GATs work well in tasks like node classification and recommendation.
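The idea of attention over neighbors can be sketched as follows. This is a simplified illustration, not a full GAT layer: it scores each (node, neighbor) pair with a hypothetical attention vector and a LeakyReLU, normalizes the scores with a softmax, and omits the learned feature projection that a real GAT applies first.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def attention_weights(node, neighbor_ids, features, attn_vector):
    """Softmax-normalized attention of `node` over its neighbors."""
    scores = []
    for neighbor in neighbor_ids:
        pair = features[node] + features[neighbor]  # concatenate both vectors
        raw = sum(a * v for a, v in zip(attn_vector, pair))
        scores.append(leaky_relu(raw))
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(score - largest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def attend(node, neighbor_ids, features, attn_vector):
    """Aggregate neighbor features, weighted by their attention."""
    alphas = attention_weights(node, neighbor_ids, features, attn_vector)
    dim = len(features[node])
    return [
        sum(alpha * features[n][d] for alpha, n in zip(alphas, neighbor_ids))
        for d in range(dim)
    ]


features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [0.0, 3.0]}
attn_vector = [0.1, 0.2, 0.3, 0.4]  # hypothetical; learned in a real GAT

alphas = attention_weights(0, [1, 2], features, attn_vector)
new_feature = attend(0, [1, 2], features, attn_vector)
print(alphas, new_feature)
```

Here node 2 receives the larger attention weight, so the aggregated feature of node 0 is pulled toward the features of node 2.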

Graph Isomorphism Network

The graph isomorphism network (GIN) was introduced in 2018, and it produces the same output for two isomorphic graphs. GINs focus on the structural information of graphs and apply permutation-invariant functions during the steps of message passing and node update. Each node keeps its own data, and the data of its connected nodes is aggregated with it to create a more powerful network.
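The behavior on isomorphic graphs can be illustrated with a small sketch. It assumes the usual GIN-style update, where a node's own feature is scaled by (1 + eps) and added to the sum of its neighbors' features; the learned MLP that normally follows is omitted, and the two toy graphs are made up for the example.

```python
def gin_update(node, neighbors, features, eps=0.0):
    """(1 + eps) * own feature + sum of neighbor features (MLP omitted)."""
    total = [(1.0 + eps) * value for value in features[node]]
    for neighbor in neighbors[node]:
        total = [t + value for t, value in zip(total, features[neighbor])]
    return total


def graph_readout(neighbors, features):
    """Sum the updated node vectors into one graph-level vector."""
    updated = [gin_update(node, neighbors, features) for node in neighbors]
    return [sum(values) for values in zip(*updated)]


# Two isomorphic path graphs: same structure, different node labels and order.
graph_a = {0: [1], 1: [0, 2], 2: [1]}
features_a = {0: [1.0], 1: [2.0], 2: [3.0]}

graph_b = {"x": ["z"], "z": ["x", "y"], "y": ["z"]}
features_b = {"x": [3.0], "z": [2.0], "y": [1.0]}

readout_a = graph_readout(graph_a, features_a)
readout_b = graph_readout(graph_b, features_b)
print(readout_a, readout_b)  # [14.0] [14.0]
```

Because both the neighbor aggregation and the readout are sums, relabeling or reordering the nodes does not change the result.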

GraphSAGE

GraphSAGE stands for graph sample and aggregate, and it is a popular GNN architecture. It samples the local neighborhood of each node and aggregates the features of the sampled neighbors. Because only a sample of the neighbors is processed, the method scales to large graphs. It is used for graph learning tasks such as node classification and link prediction.
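The sample-then-aggregate idea can be sketched as below. This example assumes a mean aggregator and concatenates a node's own features with the aggregated neighborhood; the learned weight matrix and activation that would normally follow are omitted, and the function names and toy graph are illustrative.

```python
import random


def sample_neighbors(neighbor_ids, sample_size, rng):
    """Pick at most `sample_size` neighbors uniformly at random."""
    if len(neighbor_ids) <= sample_size:
        return list(neighbor_ids)
    return rng.sample(neighbor_ids, sample_size)


def sage_mean_step(node, neighbors, features, sample_size, rng):
    """Concatenate a node's features with the mean of sampled neighbors."""
    sampled = sample_neighbors(neighbors[node], sample_size, rng)
    dim = len(features[node])
    if sampled:
        mean = [
            sum(features[n][d] for n in sampled) / len(sampled)
            for d in range(dim)
        ]
    else:
        mean = [0.0] * dim  # isolated node: nothing to aggregate
    return features[node] + mean


# A hub node 0 with five neighbors that all share the same made-up feature.
neighbors = {0: [1, 2, 3, 4, 5]}
features = {0: [1.0], 1: [2.0], 2: [2.0], 3: [2.0], 4: [2.0], 5: [2.0]}

rng = random.Random(0)  # seeded so that the sampling is repeatable
embedding = sage_mean_step(0, neighbors, features, sample_size=2, rng=rng)
print(embedding)  # [1.0, 2.0]
```

Only two of the five neighbors are read, so the cost per node stays bounded by the sample size even when a node has a very large neighborhood.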

Applications of GNNs

The variety of GNN architectures allows them to perform many tasks. Here are some important applications of GNN in various domains:

Social Network Analysis

GNNs have applications in social networks, where they can model the relationships among network entities. As a result, they perform tasks such as link prediction, recommendation, community detection, etc.

Medical Industry

The GNN plays an important role in the medical industry in branches like bioinformatics and drug discovery. It is used in the prediction of the molecular properties of new drugs, the interactions between proteins in the body and between proteins and drugs, the formulation of new drugs based on experimentation, etc.

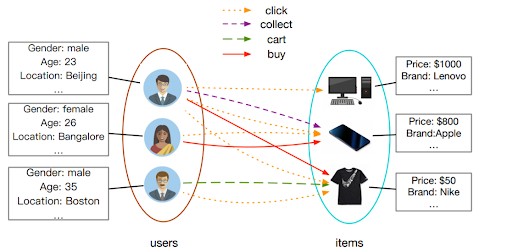

Recommendation System

Modeling relationships is a strength of GNNs, which makes them well suited to predicting and learning the interactions between users and items. Moreover, graph structures are highly useful in recommendation systems that suggest items to users at different levels.
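As a rough sketch of how user-item interactions can be scored, the example below ranks items by the dot product between a user embedding and each item embedding. It assumes the embeddings were already produced by a GNN; the values, the names, and the dot-product scoring itself are illustrative assumptions rather than a method stated in this article.

```python
def dot(a, b):
    """Dot product of two equally sized vectors."""
    return sum(x * y for x, y in zip(a, b))


def recommend(user, user_embeddings, item_embeddings, top_k):
    """Rank items by their similarity score to the user's embedding."""
    scores = {
        item: dot(user_embeddings[user], vector)
        for item, vector in item_embeddings.items()
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_k]


# Hypothetical embeddings, as if they had been learned by a GNN.
user_embeddings = {"u1": [1.0, 0.0]}
item_embeddings = {
    "book": [0.9, 0.1],
    "game": [0.1, 0.9],
    "film": [0.5, 0.5],
}

top_items = recommend("u1", user_embeddings, item_embeddings, top_k=2)
print(top_items)  # ['book', 'film']
```

A higher score means the user and the item lie closer together in the embedding space, which is read as a more likely interaction.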

Hence, we have covered the basics of graph neural networks. The basic unit of these networks is the graph, which has two parts: nodes and edges. The relationships between the nodes in the graph are responsible for the functioning of the GNN. We have seen the types of GNNs, which are divided based on their working mechanisms. In the end, we had an overview of the general applications of GNNs. These networks are a little more complex than the other modern networks we have covered in this series. In the next lecture, we will discuss another modern neural network.