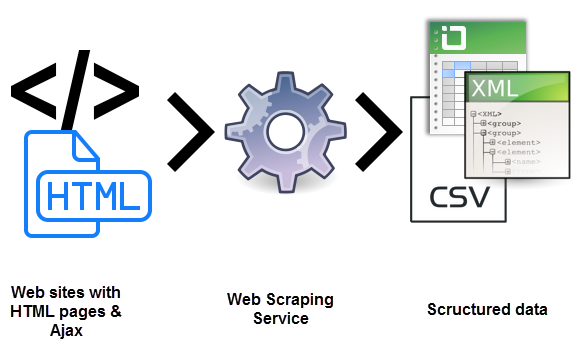

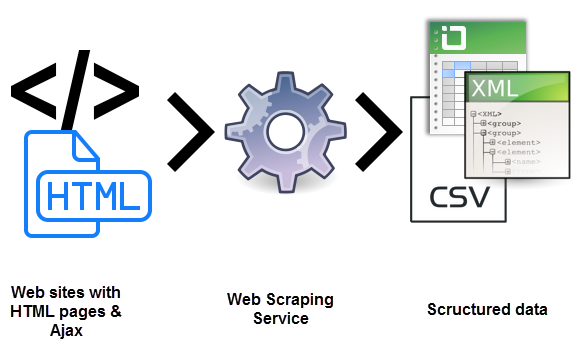

How is content scrapped?

A scrapper bot sends a sequence of HTTP GET requests to a web server. It then copies all the information sent in response and saves it. It repeatedly does this until all the content has been scrapped.

Scrappers can use JavaScript to download gated content or fill out every form on a website. The automation in browser programs and APIs allow the automated bots to interact with online infrastructures as they apply the traditional web browser techniques. They effectively trick the computer into thinking that the user accessing the content is a human.

The last way that content may be scrapped is by the actual user. They can decide to go through all your content and copy what they find useful or everything within a website. Unlike bots that do this in a matter of seconds, human beings can take days or hours exposing themselves to the risk of detection.

Why do scrappers steal your content?

There are various motives that an attacker may have to scrape your website content. The baseline is that scrappers can monetize your content or generate more traffic to them. Below are the main reasons for content scraping?

To generate leads

When an attacker is part of a small community and wants to seem like leaders in their field, they result in scrapping related content. When you search for an established company, you end up on their site because they have scrapped its content. This is called lead generation. It is common in legal practice and upcoming businesses.

Advertising revenue

Other people may have good intentions, like creating a knowledge hub—a one-stop place for users in a specific field. When you catch them in action, the reply is that they were doing it for the good of the community.

Commissions from affiliate marketing

Some other attackers use your content to make a few extra dollars through affiliate marketing. They combine the scrapped content with driving search engine traffic to their websites. These websites are usually used to promote certain products.

Techniques for content scraping protection

Content is at the heart of any online business. Therefore, you need to take proper care. By adopting measures to protect the content, you protect your business from the related risks and defend the reputation of your brand. When scraped, they post your content elsewhere. Among the other ways, Really Simple Syndication (RSS) is the most commonly used scrapping method. With all the risks and disadvantages of content scrapping in mind, you must take adequate measures to protect your website. Below are some of such measures.

Limiting the access to a post

Many websites allow uninhibited access to articles and website posts to ensure that society is well informed. Unfortunately, the scrapping bots and attackers take this opportunity to scrape the data. By allowing access to only a few lines or paragraphs, you ensure the attacker has bits of information that they cannot use. This frustrates them as attackers as they cannot use your quality content. To access the rest of the content, you can ask a user to create an account and log in or subscribe by paying cash. These methods will help identify if the user is a bot or an actual human being while protecting your content from scrappers. If it is a bot, they will not pay the cash but may create the account and log in. Therefore, it is advisable to implement the two measures together.

Protection by using the CAPTCHA

A CAPTCHA is a Turing test designed to tell between humans and bots. They are easy enough that any average human can answer while remaining complicated for the other computers and computer programs like bots to fill. A CAPTCHA can help you protect content scrapping by blocking the suspicious bot areas. They come in various forms, including invisible CAPTCHA, confident CAPTCHA, math problems, entering the characters on the screen, honeypot CAPTCHA, among other CAPTCHA solutions. While security researchers hint they are ineffective in protecting your content from malicious bot actions, they also advise that it is better to have them installed. The only limitation of various CAPTCHA implementations is that they influence a user’s experience negatively.

Protection through traffic monitoring

Through monitoring traffic, you can ensure that all the traffic comes from legitimate sources. If any originates from a suspicious source, you can take measures to investigate and eradicate them. Besides helping you protect your content from scrapping, traffic monitoring helps identify other issues that may pose a threat to your content. They include a denial of service, account takeovers, and scalping. In this method, you can identify the sources and block them. These block any further encroachment of your content by these scrappers.

Adding links to your content

To save your content from scrapers, you can add links within it. This is an easier way to protect your content. When a scrapper copies the content, they leave the links intact. While this protects it, linking the keywords makes your content more engaging to the users. Plugins that enable customizations in RSS feeds, and HTML codes can accomplish this. This ensures that when the attacker posts your content on their website, users get redirected to your site when they click the content and keywords.

Rate limiting

Bots browse the web pages incredibly fast. Because they have to complete the actions first before they are detected, they leverage speed as one option to remain invisible.

Therefore, they can access over 50 pages in seconds. The number of incoming requests when such an activity is detected can help protect your content from scrapping. Besides scrapping protection, limiting the access rates can also prevent a more DDoS attack.

Protecting your content using a bot management solution

Because the attackers use modern techniques when developing the scrapping bots, you also have to match the response by bringing the big guns. Bot management solutions are sophisticated modern utilities that also use modern methods to ensure the safety of your content from bot activities. They differentiate the legitimate bot from a malicious one and take measures to ensure your website, mobile application, or API’s security is fortified. Such solutions like DataDome analyze every request to an API, website, or Mobile application for various bot activities in real-time. When they detect a threat, they use all measures to protect and safeguard the integrity of your content. Besides content scrapping protection, DataDome protects you from other OWASP Automated Threats (OAT).

Other measures include; setting Google alerts when a person tries to copy your content, embedding the content into a media and regularly changing the HTML markup.

Conclusion

Bot developers devise new ways and tricks to develop stealthy bots that are more efficient daily. Therefore, the risks associated with bot activity will not go away in a jiffy. It is for this reason that you need to put mechanisms to protect your content from scrapping. Though no one technique guarantees 100% safety, using a bot solution like DataDome gives better results because it is a real-time tool for bot management.